tagged by: generative AI

Emerging Patterns in Building GenAI Products

As we move software products using generative AI technology from proof-of-concepts into production systems, we are uncovering a range of common patterns. Evals play a central role in ensuring that these non-deterministic systems are operating within sensible boundaries. Large Language Models need enhancement to provide information beyond a generic and static training set. Most of the time we can do this with Retrieval Augmented Generation (RAG), although the basic RAG approach requires several patterns to overcome its limitations. When RAG isn't enough, Fine Tuning becomes worthwhile.

Exploring Generative AI

Generative AI and particularly LLMs (Large Language Models) have exploded into the public consciousness. Like many software developers Birgitta is intrigued by the possibilities, but unsure what exactly it will mean for our profession in the long run. She has taken on a role in Thoughtworks to coordinate our work on how this technology will affect software delivery practices. On this page she posts a series of memos to describe what she and our colleagues are learning and thinking.

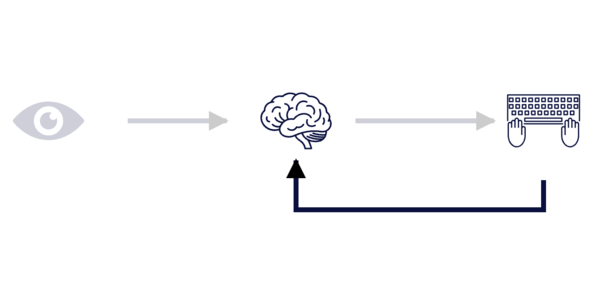

Conversation: LLMs and the what/how loop

A conversation between Unmesh, Rebecca, and Martin on how LLMs help us shape the abstractions in our software. We view our challenge as building systems that survive change, requiring us to manage our cognitive load. We can do this by mapping the “what” of what we want our software to do into the “how” of programming languages. This “what” and “how” are built up in a feedback loop. TDD helps us operationalize that loop, and LLMs allow us to explore that loop in an informal and more fluid manner.

How far can we push AI autonomy in code generation?

We ran a series of experiments to explore how far Generative AI can currently be pushed toward autonomously developing high-quality, up-to-date software without human intervention. As a test case, we created an agentic workflow to build a simple Spring Boot application end to end. We found that the workflow could ultimately generate these simple applications, but still observed significant issues in the results—especially as we increased the complexity. The model would generate features we hadn't asked for, make shifting assumptions around gaps in the requirements, and declare success even when tests were failing. We concluded that while many of our strategies — such as reusable prompts or a reference application — are valuable for enhancing AI-assisted workflows, a human in the loop to supervise generation remains essential.

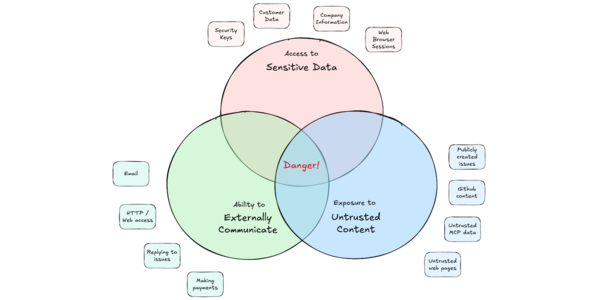

Agentic AI and Security

Agentic AI systems present unique security challenges. The fundamental security weakness of LLMs is that there is no rigorous way to separate instructions from data, so anything they read is potentially an instruction. This leads to the “Lethal Trifecta”: sensitive data, untrusted content, and external communication - the risk that the LLM will read hidden instructions that leak sensitive data to attackers. We need to take explicit steps to mitigate this risk by minimizing access to each of these three elements. It is valuable to run LLMs inside controlled containers and break up tasks so that each sub-task blocks at least one of the trifecta. Above all do small steps that can be controlled and reviewed by humans.
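The idea of splitting work so that each sub-task blocks at least one element of the trifecta can be illustrated with a small check. This is a minimal sketch, not a security control: the `SubTask` type, its field names, and the example tasks are all hypothetical, and a real system would need to derive these capabilities from actual permissions rather than hand-written flags.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class SubTask:
    """Hypothetical description of what one agent sub-task can touch."""
    name: str
    reads_sensitive_data: bool
    reads_untrusted_content: bool
    can_communicate_externally: bool


def has_lethal_trifecta(task: SubTask) -> bool:
    # A sub-task is dangerous only when all three elements are present at once.
    return (
        task.reads_sensitive_data
        and task.reads_untrusted_content
        and task.can_communicate_externally
    )


def risky_subtasks(tasks: list[SubTask]) -> list[str]:
    # Names of sub-tasks that fail to block at least one element.
    return [t.name for t in tasks if has_lethal_trifecta(t)]


# Illustrative tasks (assumed, not taken from the article).
tasks = [
    SubTask("summarize_inbox", True, True, False),   # no external communication
    SubTask("fetch_web_page", False, True, True),    # no sensitive data
    SubTask("auto_reply", True, True, True),         # all three: needs splitting
]

flagged = risky_subtasks(tasks)
print(flagged)
```

Here only `auto_reply` is flagged, which suggests breaking it up or putting a human review step in front of it.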

The Learning Loop and LLMs

LLMs are useful because they lower the threshold for experimentation. But we have to be careful not to use them to shortcut the learning loop that's an essential part of a software developer's practice. We have seen this problem with tools like low-code platforms: they provide a rapid burst of initial development, but we cannot sustain them because they undermine the learning required for sustained development capability.

Conversation with Gergely Orosz (Pragmatic Engineer Podcast)

I've become quite the admirer of Gergely Orosz's work over the last few years, both his newsletter and his podcast. He produces penetrating insights on how to thrive in the software industry. So I was chuffed to be invited to his podcast while I was in Amsterdam. Naturally the role of LLMs in software development takes up much of the conversation. I talk about some of the ways it certainly helps (understanding legacy systems, exploratory prototypes) and the dangerous areas (lethal trifecta). We also delve into my career with Thoughtworks, how we produce the Thoughtworks Technology Radar, and my thoughts about how best to learn in this dynamic environment.

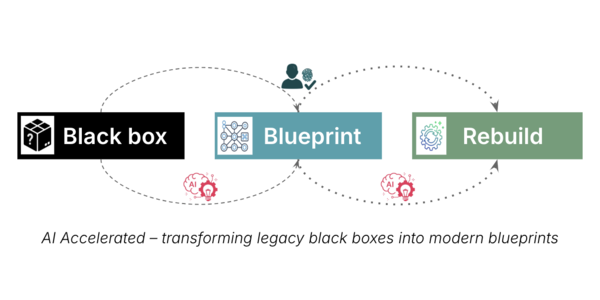

From Black Box to Blueprint

A common enterprise problem: crucial legacy systems become “black boxes”—key to operations but opaque and risky to touch. We worked with a client to use AI-assisted reverse engineering to reconstruct functional specifications from UI elements, binaries, and data lineage to overcome analysis paralysis. We developed a methodical “multi-lens” approach—starting from visible artifacts, enriching incrementally, triangulating logic, and always preserving lineage. Human validation remains central to ensure accuracy and confidence in extracted functionality. This engagement revealed that turning a system from black box to blueprint empowers modernization decisions and accelerates migration efforts.

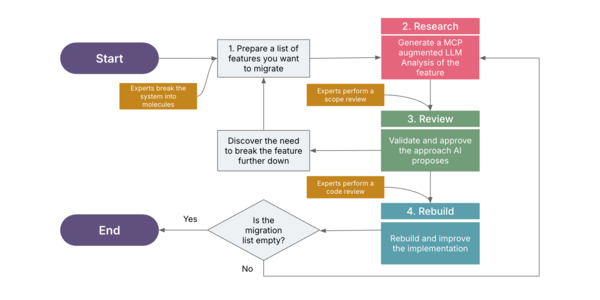

Research, Review, Rebuild

The Bahmni open-source hospital management system started over nine years ago with a front end using AngularJS and an OpenMRS REST API. We wished to convert this to use a React + TypeScript front end with an HL7 FHIR API. In exploring how to do this modernization we used a structured prompting workflow of Research, Review, and Rebuild - together with Cline, Claude 3.5 Sonnet, Atlassian MCP server, and a filesystem MCP server. Changing a single control would normally take 3–6 days of manual effort, but with these tools was completed in under an hour at a cost of under $2.

Building your own CLI Coding Agent with Pydantic-AI

CLI coding agents are a fundamentally different tool to chatbots or autocomplete tools - they're agents that can read code, run tests, and update a codebase. While commercial tools are impressive, they don't understand the particular context of our environment and the eccentricities of our specific project. Instead we can build our own coding agent by assembling open source tools, using our specific development standards for: testing, documentation production, code reasoning, and file system operations.

Who is LLM?

Is an LLM a stubborn donkey, a genie, a slot machine, or Uriah Heep?

Conversation: LLMs and Building Abstractions

Unmesh and Martin exchanged some emails about building abstractions while working with an LLM. They talk about the influence of Brooks's framing of essential and accidental complexity, and how this carries over to thinking of programming as both growing and applying abstractions. An LLM is useful in both modes of working, but has to be used differently. We can't reduce growing abstractions to a static prompt; instead we have to learn to create a shared vocabulary iteratively with the LLM.

Some thoughts on LLMs and Software Development

I’m about to head away from looking after this site for a few weeks (part vacation, part work stuff). As I contemplate some weeks away from the daily routine, I feel an urge to share some scattered thoughts about the state of LLMs and AI.

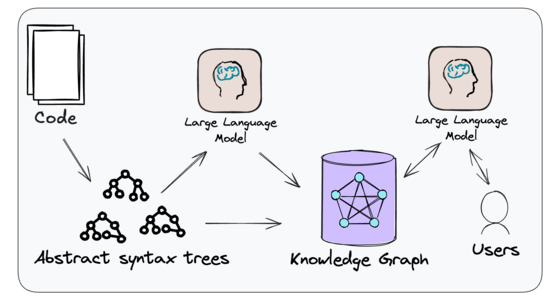

Legacy Modernization meets GenAI

So far, most attention to Generative Artificial Intelligence (GenAI) in software development is on generating code. But we believe there is as much, if not more, value in understanding existing code - particularly long-lived, large, and complex legacy systems. We have been experimenting with GenAI for modernization with our clients, embodied in a tool called CodeConcise, which combines a Large Language Model (LLM) with a knowledge graph derived from the abstract syntax trees of a codebase. We have seen positive results from this approach in both drawing out low-level requirements and building a high-level explanation of a system.
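To give a feel for what deriving a graph from abstract syntax trees can mean, here is a toy sketch using Python's standard `ast` module to build a function-level call graph. It is an illustration only and makes no claim about how CodeConcise works internally; the `SOURCE` snippet and the `call_graph` helper are hypothetical, and only direct calls by plain name are captured.

```python
import ast

# Hypothetical stand-in for a file from a legacy codebase.
SOURCE = '''
def load(path):
    return open(path).read()

def parse(text):
    return text.split()

def main(path):
    return parse(load(path))
'''


def call_graph(source: str) -> dict[str, set[str]]:
    """Map each function name to the plain names it calls directly."""
    tree = ast.parse(source)
    graph: dict[str, set[str]] = {}
    for node in ast.walk(tree):
        if isinstance(node, ast.FunctionDef):
            callees = set()
            for inner in ast.walk(node):
                # Only calls like f(...) are recorded; method calls such as
                # obj.f(...) have an ast.Attribute as func and are skipped.
                if isinstance(inner, ast.Call) and isinstance(inner.func, ast.Name):
                    callees.add(inner.func.id)
            graph[node.name] = callees
    return graph


graph = call_graph(SOURCE)
for caller, callees in sorted(graph.items()):
    print(caller, "->", sorted(callees))
```

Edges like these could be loaded into a graph store and handed to an LLM as context, so that a question about `main` can pull in the functions it depends on.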

The DeepSeek Series: A Technical Overview

The appearance of DeepSeek Large-Language Models has caused a lot of discussion and angst since their latest versions appeared at the beginning of 2025. But much of the value of DeepSeek's work comes from the papers they have published over the last year. This article provides an overview of these papers, highlighting three main arcs in this research: a focus on improving cost and memory efficiency, the use of HPC Co-Design to train large models on limited hardware, and the development of emergent reasoning from large-scale reinforcement learning.

LLMs bring new nature of abstraction

Like most loudmouths in this field, I've been paying a lot of attention to the role that generative AI systems may play in software development. I think the appearance of LLMs will change software development to a similar degree as the change from assembler to the first high-level programming languages. The further development of languages and frameworks increased our abstraction level and productivity, but didn't have that kind of impact on the nature of programming. LLMs are making that degree of impact, but with the distinction that it isn't just raising the level of abstraction, but also forcing us to consider what it means to program with non-deterministic tools.

Where Is SW Development Going?

I was on a panel at GOTO Copenhagen with Holly Cummins, Trisha Gee, Dave Farley, and Daniel Terhorst-North. We discussed the current state of software development and where it was heading. Given the timing, there was much discussion about the role AI would play in our profession's future.

Function calling using LLMs

While LLMs excel at generating cogent text based on their training data, they may also need to interact with external systems. Function calling allows them to construct such calls. The LLM does not execute these calls directly; instead it creates a data structure that describes the call and passes it to a separate program for execution and further processing. The LLM's prompt includes details about possible function calls and when they should be used.
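The split between describing a call and executing it can be sketched as follows. This is a minimal illustration under stated assumptions: the JSON shape (`name`, `arguments`), the `REGISTRY` dictionary, and the `get_weather` stub are all hypothetical and do not reflect any particular vendor's API. The LLM's reply is represented by a hard-coded string.

```python
import json


def get_weather(city: str) -> str:
    # Stub standing in for a real external system.
    return f"Weather for {city}: unavailable in this sketch"


# Functions the host program is willing to run on the LLM's behalf.
REGISTRY = {"get_weather": get_weather}


def execute_call(llm_output: str) -> str:
    """Parse the call description produced by the LLM and run it ourselves."""
    call = json.loads(llm_output)
    name = call["name"]
    if name not in REGISTRY:
        # Never run anything that was not explicitly registered.
        raise ValueError(f"unknown function: {name}")
    return REGISTRY[name](**call.get("arguments", {}))


# Assumed LLM output: a data structure describing the call, not the call itself.
llm_output = '{"name": "get_weather", "arguments": {"city": "Amsterdam"}}'
result = execute_call(llm_output)
print(result)
```

Keeping execution in the host program means it decides which functions exist and can validate arguments before anything touches an external system.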

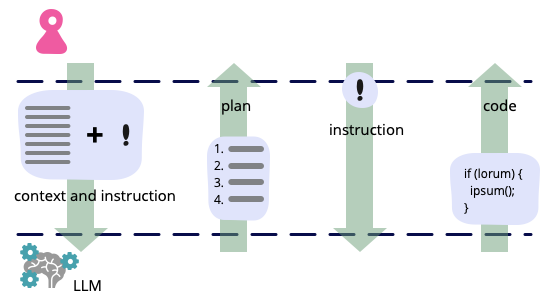

An example of LLM prompting for programming

My account of an internal chat with Xu Hao, where he shows how he drives ChatGPT to produce useful self-tested code. His initial prompt primes the LLM with an implementation strategy (chain of thought prompting). His prompt also asks for an implementation plan rather than code (general knowledge prompting). Once he has the plan he uses it to refine the implementation and generate useful sections of code.

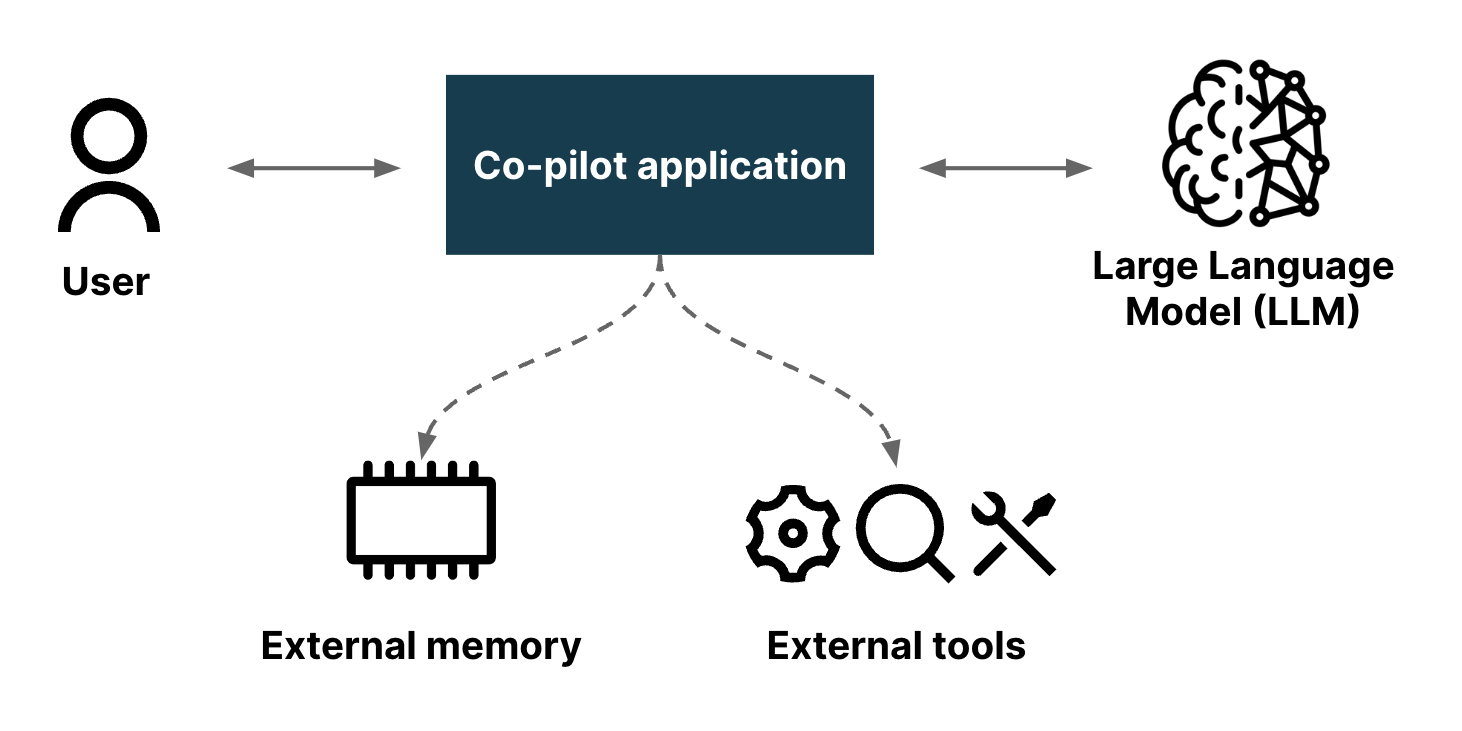

Building Boba AI

We are building an experimental AI co-pilot for product strategy and generative ideation called “Boba”. Along the way, we’ve learned some useful lessons on how to build these kinds of applications, which we’ve formulated in terms of patterns. These patterns allow an application to help the user interact more effectively with a Large-Language Model (LLM), orchestrating prompts to gain better results, helping the user navigate a path of an intricate conversational flow, and integrating knowledge that the LLM doesn't have available.

Engineering Practices for LLM Application Development

LLM engineering involves much more than just prompt design or prompt engineering. In this article, we share a set of engineering practices that helped us deliver a prototype LLM application rapidly and reliably in a recent project. We'll share techniques for automated testing and adversarial testing of LLM applications, refactoring, as well as considerations for architecting LLM applications and responsible AI.

Using ChatGPT as a technical writing assistant

An experienced technical author explores using ChatGPT to assist with a number of writing projects. He finds ChatGPT can provide time-savings through drafts and prompting for additional content, but lacks accuracy and depth - as well as suffering from bubbly optimism. Overall it is useful if you work iteratively, asking for small chunks with well-crafted prompts.

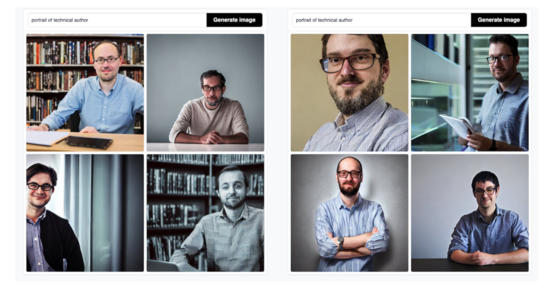

What Does a Technical Author Look Like?

Asking Stable Diffusion for "portrait of technical author"

Instead of restricting AI and algorithms, make them explainable

The steady increase in deployment of AI tools has left a lot of people concerned about how software makes decisions that affect our lives. In one example, it's about “algorithmic” feeds in social media that promote posts that drive engagement. A more serious impact can come from business decisions, such as how much premium to charge in car insurance. This can extend to affecting legal decisions, such as suggesting sentencing guidelines to judges.

Agentic Email

I've heard a number of reports recently about people setting up LLM agents to work on their email and other communications. The LLM has access to the user's email account, reads all the emails, decides which emails to ignore, drafts some emails for the user to approve, and replies to some emails autonomously. It can also hook into a calendar, confirming, arranging, or denying meetings.