during: 2025

My posts on BoardGameGeek

Most of my writing about board games is done on BoardGameGeek (BGG), a specialized website for those like me who are enthusiastic about the world of hobby board games. I like using BGG's blogs, as it naturally reaches a wide audience of enthusiasts. But one thing it lacks is a decent way of indexing blogs and other writings on its site. So this page is such an index, collecting together my blog posts and other substantial things I write on that site.

My Favorite board games

Should a disaster destroy my collection, these are the board games I would strive to obtain again. I haven't ranked them, as trying to rank games feels arbitrary, although it's also fun. Instead I think of these as the games I would award a star to, were I a boardgaming Michelin guide.

Conversation with Gergely Orosz (Pragmatic Engineer Podcast)

I've become quite the admirer of Gergely Orosz's work over the last few years, both his newsletter and his podcast. He produces penetrating insights on how to thrive in the software industry. So I was chuffed to be invited to his podcast while I was in Amsterdam. Naturally the role of LLMs in software development takes up much of the conversation. I talk about some of the ways they certainly help (understanding legacy systems, exploratory prototypes) and the dangerous areas (lethal trifecta). We also delve into my career with Thoughtworks, how we produce the Thoughtworks Technology Radar, and my thoughts about how best to learn in this dynamic environment.

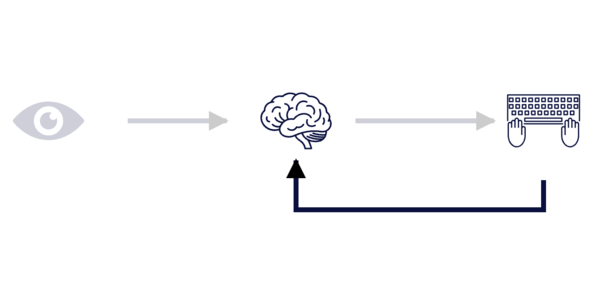

The Learning Loop and LLMs

LLMs are useful because they lower the threshold for experimentation. But we must beware of using them to shortcut the learning loop that is an essential part of a software developer's practice. We have seen this problem with tools like low-code platforms: they provide a rapid burst of initial development, but we cannot sustain them because they undermine the learning required for sustained development capability.

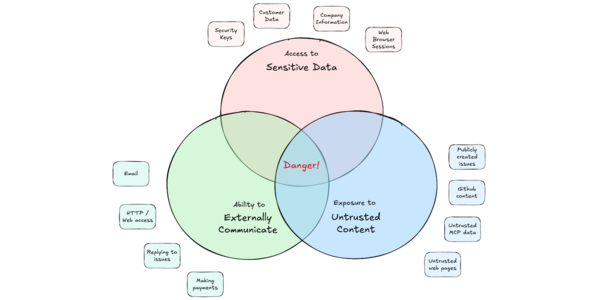

Agentic AI and Security

Agentic AI systems present unique security challenges. The fundamental security weakness of LLMs is that there is no rigorous way to separate instructions from data, so anything they read is potentially an instruction. This leads to the “Lethal Trifecta”: when an agent combines sensitive data, untrusted content, and external communication, there is a risk that the LLM will read hidden instructions that cause it to leak sensitive data to attackers. We need to take explicit steps to mitigate this risk by minimizing access to each of these three elements. It is valuable to run LLMs inside controlled containers and to break up tasks so that each sub-task blocks at least one element of the trifecta. Above all, take small steps that can be controlled and reviewed by humans.
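One way to make the rule "each sub-task blocks at least one element of the trifecta" checkable is to tag every sub-task with the capabilities it holds and flag any that holds all three. This is a minimal sketch: the `SubTask` type, the capability labels, and the example plan are hypothetical, and real agent frameworks express permissions in their own ways.

```python
from dataclasses import dataclass

# The three elements of the "Lethal Trifecta" (labels are our own invention).
TRIFECTA = frozenset({"sensitive_data", "untrusted_content", "external_communication"})


@dataclass(frozen=True)
class SubTask:
    """A hypothetical unit of agent work and the capabilities it is granted."""
    name: str
    capabilities: frozenset


def exposes_full_trifecta(task: SubTask) -> bool:
    """True if the sub-task holds all three elements at once: the risky case."""
    return TRIFECTA <= task.capabilities


def unsafe_tasks(plan):
    """Names of sub-tasks that fail to block at least one element."""
    return [t.name for t in plan if exposes_full_trifecta(t)]


plan = [
    # Reads untrusted content and can post results, but sees no private data.
    SubTask("summarize_issue", frozenset({"untrusted_content", "external_communication"})),
    # Reads private data and untrusted content, with no way to send anything out.
    SubTask("triage_locally", frozenset({"sensitive_data", "untrusted_content"})),
    # Holds all three at once: this is the combination to break up.
    SubTask("auto_reply", frozenset(TRIFECTA)),
]

print(unsafe_tasks(plan))  # ['auto_reply']
```

A check like this only catches what the plan declares; it complements, rather than replaces, running the agent in a controlled container.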

Conversation with James Lewis from goto 2024

During the goto Copenhagen conference in 2024, I had a conversation on stage with my friend and colleague James Lewis. He asked me about how I got involved in the early days of Agile Software Development, the importance of close collaboration between developers and users, and the origin of my essay “Is Design Dead” on the role of evolutionary architecture. We also opine on some audience questions including pair programming, the nature of developer productivity, and (inevitably) our thoughts on the role of Gen AI in programming at that time.

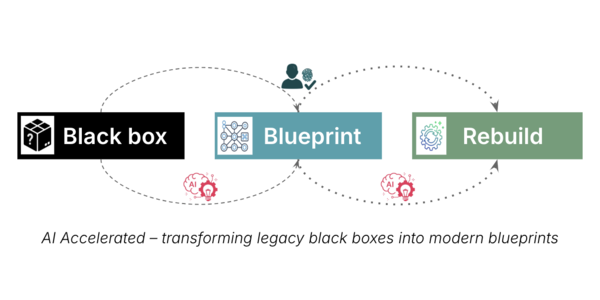

From Black Box to Blueprint

A common enterprise problem: crucial legacy systems become “black boxes”, key to operations but opaque and risky to touch. We worked with a client to overcome analysis paralysis by using AI-assisted reverse engineering to reconstruct functional specifications from UI elements, binaries, and data lineage. We developed a methodical “multi-lens” approach: starting from visible artifacts, enriching incrementally, triangulating logic, and always preserving lineage. Human validation remains central to ensuring accuracy and confidence in the extracted functionality. This engagement revealed that turning a system from black box to blueprint empowers modernization decisions and accelerates migration efforts.
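The principles of enriching incrementally, triangulating, preserving lineage, and keeping a human gate can be pictured as a small record kept for each reconstructed rule. This is a hypothetical sketch, not the engagement's actual tooling: the `Finding` and `Source` types, the lens names, the "two lenses" threshold, and the example rule are all assumptions made for illustration.

```python
from dataclasses import dataclass, field


@dataclass
class Source:
    """Where a piece of evidence came from (a hypothetical lineage record)."""
    lens: str      # e.g. "ui", "binary", "data_lineage"
    location: str  # e.g. a screen name, a function address, a table column


@dataclass
class Finding:
    """One reconstructed business rule plus every source that supports it."""
    rule: str
    sources: list = field(default_factory=list)
    human_validated: bool = False

    def add_evidence(self, source: Source) -> None:
        # Enrich incrementally: evidence is appended, never overwritten,
        # so the lineage of the rule is preserved.
        self.sources.append(source)

    def lenses(self) -> set:
        return {s.lens for s in self.sources}

    def is_triangulated(self) -> bool:
        # Assumed threshold: two or more independent lenses must agree.
        return len(self.lenses()) >= 2

    def ready_for_spec(self) -> bool:
        # Human validation stays the final gate before the rule enters the spec.
        return self.is_triangulated() and self.human_validated


# Hypothetical example rule and evidence.
f = Finding("Orders over 10,000 require manager approval")
f.add_evidence(Source("ui", "OrderEntry screen: approval dialog"))
f.add_evidence(Source("data_lineage", "ORDERS.APPROVER_ID set when TOTAL > 10000"))
print(f.is_triangulated(), f.ready_for_spec())  # True False
```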

Some thoughts on LLMs and Software Development

I’m about to head away from looking after this site for a few weeks (part vacation, part work stuff). As I contemplate some weeks away from the daily routine, I feel an urge to share some scattered thoughts about the state of LLMs and AI.

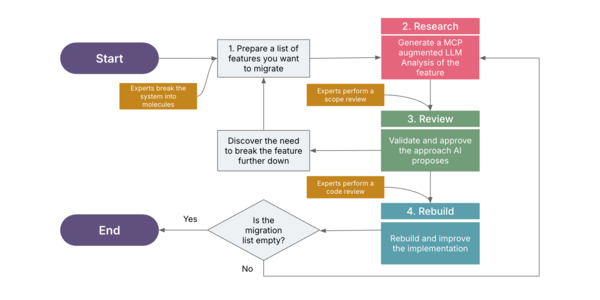

Research, Review, Rebuild

The Bahmni open-source hospital management system started over nine years ago with a front end using AngularJS and an OpenMRS REST API. We wished to convert this to use a React + TypeScript front end with an HL7 FHIR API. In exploring how to do this modernization we used a structured prompting workflow of Research, Review, and Rebuild, together with Cline, Claude 3.5 Sonnet, Atlassian MCP server, and a filesystem MCP server. Changing a single control would normally take 3–6 days of manual effort, but with these tools it was completed in under an hour at a cost of under $2.
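The shape of a Research, Review, Rebuild workflow can be sketched as three prompts whose outputs feed forward, with a checkpoint before any code is generated. This is a hedged illustration only: the prompt wording, the `llm` and `approve` callables, and the assumption that Review is an LLM critique followed by human sign-off are ours, not a description of the Cline setup used in the work.

```python
from typing import Callable, Optional

# Hypothetical prompt templates; the real workflow's prompts are not reproduced here.
RESEARCH = "Describe how the AngularJS control '{control}' behaves and which OpenMRS REST calls it makes."
REVIEW = "Check this analysis for gaps or mistakes:\n{notes}"
REBUILD = "Using this reviewed analysis, write a React + TypeScript component that uses the FHIR API:\n{notes}"


def migrate_control(control: str,
                    llm: Callable[[str], str],
                    approve: Callable[[str], bool]) -> Optional[str]:
    """Run one control through Research -> Review -> Rebuild.

    `llm` is any function that sends a prompt to a model and returns text;
    `approve` is a human checkpoint. Both are assumptions for this sketch.
    """
    notes = llm(RESEARCH.format(control=control))   # Research
    reviewed = llm(REVIEW.format(notes=notes))      # Review (model critique)
    if not approve(reviewed):                       # a person signs off
        return None
    return llm(REBUILD.format(notes=reviewed))      # Rebuild


# Usage with a stand-in model that just numbers its responses.
calls = []


def fake_llm(prompt: str) -> str:
    calls.append(prompt)
    return f"response #{len(calls)}"


result = migrate_control("date-picker", fake_llm, approve=lambda notes: True)
print(result)  # response #3
```

Keeping the approval step as a parameter makes it easy to see where a human sits in the loop, and to test the pipeline without calling a real model.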

Building your own CLI Coding Agent with Pydantic-AI

CLI coding agents are a fundamentally different tool to chatbots or autocomplete tools: they're agents that can read code, run tests, and update a codebase. While commercial tools are impressive, they don't understand the particular context of our environment and the eccentricities of our specific project. Instead, we can build our own coding agent by assembling open source tools and applying our specific development standards for testing, documentation production, code reasoning, and file system operations.
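At the heart of any such agent is a loop: the model chooses a tool, the program runs it, and the result goes back to the model. A framework like Pydantic-AI handles this plumbing for you; the sketch below deliberately does not use its API. Instead it shows the underlying idea in plain Python with a scripted stand-in model. The JSON message format, `make_tools`, and `run_agent` are hypothetical names chosen for illustration.

```python
import json
import tempfile
from pathlib import Path
from typing import Callable


def make_tools(root: Path) -> dict:
    """Hypothetical file-system tools; paths are resolved relative to `root`."""
    def read_file(path: str) -> str:
        return (root / path).read_text()

    def write_file(path: str, content: str) -> str:
        (root / path).write_text(content)
        return f"wrote {len(content)} chars to {path}"

    return {"read_file": read_file, "write_file": write_file}


def run_agent(task: str, model: Callable[[list], str], tools: dict, max_steps: int = 10) -> str:
    """Minimal agent loop: the model picks a tool, we run it, and feed back the result.

    `model` takes the message history and returns JSON, either
    {"tool": name, "args": {...}} or {"done": summary}. That format is an
    assumption for this sketch.
    """
    history = [{"role": "user", "content": task}]
    for _ in range(max_steps):
        reply = json.loads(model(history))
        history.append({"role": "assistant", "content": json.dumps(reply)})
        if "done" in reply:
            return reply["done"]
        result = tools[reply["tool"]](**reply.get("args", {}))
        history.append({"role": "tool", "content": result})
    raise RuntimeError("agent did not finish within max_steps")


# A scripted "model" so the loop can run without any LLM.
script = iter([
    '{"tool": "read_file", "args": {"path": "README.md"}}',
    '{"tool": "write_file", "args": {"path": "README.md", "content": "# Demo\\nUpdated."}}',
    '{"done": "README updated"}',
])
with tempfile.TemporaryDirectory() as d:
    root = Path(d)
    (root / "README.md").write_text("# Demo\n")
    summary = run_agent("Update the README", lambda history: next(script), make_tools(root))
    final_text = (root / "README.md").read_text()
print(summary)
```

Project-specific standards would enter this picture as extra tools (for example, one that runs your test suite) and as instructions in the task prompt.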

Conversation: LLMs and Building Abstractions

Unmesh and Martin exchanged some emails about building abstractions while working with an LLM. They talk about the influence of Brooks's framing of essential and accidental complexity, and how this carries over to thinking of programming as both growing and applying abstractions. An LLM is useful in both modes of working, but has to be used differently. We can't reduce growing abstractions to a static prompt; instead, we have to learn to create a shared vocabulary iteratively with the LLM.

Expansion Joints

Back in the days when I did live talks, one of my abilities was to finish on time, even if my talk time was cut at the last moment (perhaps due to the prior speaker running over). The key to doing this was to use Expansion Joints: parts of the talk that I'd pre-planned so I could cover them quickly or slowly depending on how much time I had.
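Expansion Joints are a speaking technique rather than code, but the arithmetic behind them is simple enough to sketch. Assuming each section has a short and a long version (fixed sections have equal values), the available slack can be shared out by scaling every joint by the same fraction. The proportional rule, the `plan_talk` function, and the example sections are hypothetical simplifications; in practice a speaker adjusts by feel.

```python
def plan_talk(sections, available):
    """Fit a talk into `available` minutes.

    sections: list of (name, short, long) durations in minutes; a fixed
    section has short == long. Every expansion joint is scaled by the same
    fraction between its short and long versions.
    """
    shortest = sum(short for _, short, _ in sections)
    longest = sum(long for _, _, long in sections)
    if available < shortest:
        raise ValueError("not enough time even with every joint at its shortest")
    slack = longest - shortest
    fraction = 1.0 if slack == 0 else min(1.0, (available - shortest) / slack)
    return {name: short + fraction * (long - short) for name, short, long in sections}


# Hypothetical talk outline: 35 minutes at minimum, 55 at maximum.
sections = [
    ("opening", 5, 5),
    ("examples", 5, 15),      # expansion joint
    ("core argument", 20, 20),
    ("war stories", 0, 10),   # expansion joint that can vanish entirely
    ("close", 5, 5),
]
print(plan_talk(sections, 45))
```

With 45 minutes available, both joints run at half their extra length, so the examples take 10 minutes and the war stories 5.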

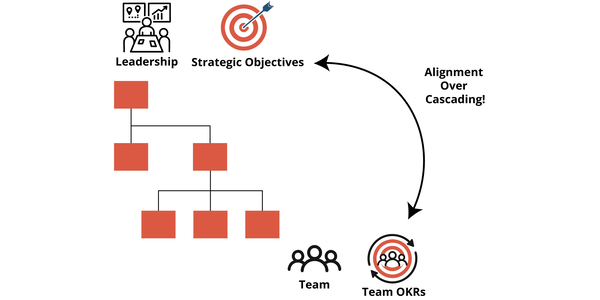

Team OKRs in Action

OKRs have become a popular way to connect strategy with execution in large organizations. But when they are set in a top‑down cascade, they often lose their meaning. Teams receive objectives they didn’t help create, and the result is weak commitment and little real change. High‑performing teams work in another way. They define their own objectives in an organization that uses a collaborative process to align the team’s OKRs with the broader strategy. Once in place, these Team OKRs create a shared purpose and become the base for a regular cycle of planning, check‑ins, and retrospectives.

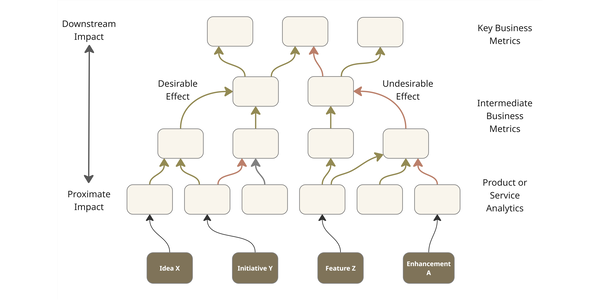

The Reformist CTO’s Guide to Impact Intelligence

The productivity of knowledge workers is hard to quantify and often decoupled from direct business outcomes. This lack of understanding leads to a proliferation of initiatives, bloated tech spend, and ill-chosen efforts to improve this productivity. Technology leaders need to avoid this by developing intelligence about the business impact of their work, across a network connecting output to proximate and downstream impact. We can do this by introducing robust demand management, paying down measurement debt, introducing impact validation, and equipping delivery teams to build a picture of how their work translates to business impact.

How far can we push AI autonomy in code generation?

We ran a series of experiments to explore how far Generative AI can currently be pushed toward autonomously developing high-quality, up-to-date software without human intervention. As a test case, we created an agentic workflow to build a simple Spring Boot application end to end. We found that the workflow could ultimately generate these simple applications, but still observed significant issues in the results—especially as we increased the complexity. The model would generate features we hadn't asked for, make shifting assumptions around gaps in the requirements, and declare success even when tests were failing. We concluded that while many of our strategies — such as reusable prompts or a reference application — are valuable for enhancing AI-assisted workflows, a human in the loop to supervise generation remains essential.
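One failure mode above, the model declaring success while tests fail, suggests never taking the agent's word for it. A minimal guard, assuming the workflow can run the project's tests as a command, checks the exit code itself. `verified_done` is a hypothetical helper, and it complements the human in the loop rather than replacing them.

```python
import subprocess
import sys


def verified_done(agent_claims_done: bool, test_command: list) -> bool:
    """Accept an agent's 'done' only if the test command actually succeeds.

    `test_command` is whatever runs your tests (for example ["pytest", "-q"]);
    we check its exit code rather than trusting the model's report.
    """
    if not agent_claims_done:
        return False
    result = subprocess.run(test_command, capture_output=True, text=True)
    return result.returncode == 0


# Stand-in commands that simulate a failing and a passing test run.
failing = [sys.executable, "-c", "import sys; sys.exit(1)"]
passing = [sys.executable, "-c", "pass"]
print(verified_done(True, failing))  # False
```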

Exploring Generative AI

Generative AI and particularly LLMs (Large Language Models) have exploded into the public consciousness. Like many software developers, Birgitta is intrigued by the possibilities, but unsure what exactly it will mean for our profession in the long run. She has taken on a role in Thoughtworks to coordinate our work on how this technology will affect software delivery practices. On this page she posts a series of memos to describe what she and our colleagues are learning and thinking.

Who is LLM?

Is an LLM a stubborn donkey, a genie, a slot machine, or Uriah Heep?

Expert Generalists

As computer systems get more sophisticated we've seen a growing trend to value deep specialists. But we've found that our most effective colleagues have a skill in spanning many specialties. We are thus starting to explicitly recognize this as a first-class skill of “Expert Generalist”. We can identify the key characteristics of people with this skill, and thus recruit and promote based on it. We have started to design workshops to train this skill, which we think becomes more valuable with the arrival of LLMs and similar AI tools into our profession.

LLMs bring new nature of abstraction

Like most loudmouths in this field, I've been paying a lot of attention to the role that generative AI systems may play in software development. I think the appearance of LLMs will change software development to a similar degree as the change from assembler to the first high-level programming languages. The further development of languages and frameworks increased our abstraction level and productivity, but didn't have that kind of impact on the nature of programming. LLMs are making that degree of impact, with the distinction that they aren't just raising the level of abstraction but also forcing us to consider what it means to program with non-deterministic tools.

The DeepSeek Series: A Technical Overview

The appearance of DeepSeek's Large Language Models has caused a lot of discussion and angst since their latest versions appeared at the beginning of 2025. But much of the value of DeepSeek's work comes from the papers they have published over the last year. This article provides an overview of these papers, highlighting three main arcs in this research: a focus on improving cost and memory efficiency, the use of HPC Co-Design to train large models on limited hardware, and the development of emergent reasoning from large-scale reinforcement learning.

Say Your Writing

Here's one of the best tips I know for writers, which was told to me by Bruce Eckel.

Once you've got a reasonable draft, read it out loud. By doing this you'll find bits that don't sound right and need fixing. Interestingly, you don't actually have to vocalize (thus making a noise) but your lips have to move.

Early Days of Agile Development & Is Design Dead?

At goto Copenhagen last year, my friend James Lewis interviewed me. I talk about when I learned about iterative design from Kent Beck, the dangers of product owners interfering with business-developer communication, and writing the agile manifesto. During this, he specifically asked about my essay Is Design Dead. There are also some audience questions asking if pair programming is a bad thing for introverts like us (no), and (inevitably) the role of LLMs for programmers today.

Threat Modeling Guide for Software Teams

Threat modeling is a systems engineering practice where teams examine how data flows through systems to identify what can go wrong. It is a deceptively simple act that reveals security risks that automated tools cannot anticipate. Rather than conducting security analysis as a separate or upfront activity, teams should integrate threat modeling into their development process through small, regular activities. The article helps teams get started and develop their practice using different approaches across application development and infrastructure. Given increasing cybersecurity risks and growing enterprise liability awareness, this practice is more crucial than ever.
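A common way to start examining data flows (one approach among several, and an assumption here rather than the article's prescribed method) is to list each flow and highlight those that cross a trust boundary, since those are natural places to ask "what can go wrong?". The components, zones, and flows below are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Flow:
    """A data flow between two components in a hypothetical system model."""
    source: str
    target: str
    data: str


# Which trust zone each component lives in; names are illustrative.
ZONES = {
    "browser": "internet",
    "api": "dmz",
    "orders_db": "internal",
    "report_job": "internal",
    "payment_provider": "third_party",
}


def flows_to_discuss(flows, zones):
    """Flows that cross a trust boundary. These are prompts for the team's
    conversation about threats, not a verdict on what is safe."""
    return [f for f in flows if zones[f.source] != zones[f.target]]


flows = [
    Flow("browser", "api", "login credentials"),
    Flow("api", "orders_db", "order records"),
    Flow("api", "payment_provider", "card token"),
    Flow("orders_db", "report_job", "order totals"),
]
for f in flows_to_discuss(flows, ZONES):
    print(f"{f.source} -> {f.target}: {f.data}")
```

Keeping the model this small fits the advice above: a team can update it in a few minutes whenever a story adds a new flow.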

Function calling using LLMs

While LLMs excel at generating cogent text based on their training data, they may also need to interact with external systems. Function calling allows them to construct such calls. The LLM does not execute these calls directly; instead it creates a data structure that describes the call and passes it to a separate program for execution and further processing. The LLM's prompt includes details about possible function calls and when they should be used.
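The division of labor described above (the model describes a call, a separate program executes it) can be sketched without any particular LLM provider. The function registry, the JSON shape `{"name": ..., "arguments": ...}`, and `get_weather` are assumptions for illustration; each provider defines its own exact format.

```python
import json


def get_weather(city: str) -> str:
    # Stand-in for a real external system; returns canned data.
    return f"Sunny in {city}"


# Name, description and parameters are what would be described in the prompt.
# The "impl" entry stays on our side and is never sent to the model.
FUNCTIONS = {
    "get_weather": {
        "description": "Current weather for a city",
        "parameters": {
            "type": "object",
            "properties": {"city": {"type": "string"}},
            "required": ["city"],
        },
        "impl": get_weather,
    }
}


def execute_call(llm_output: str) -> str:
    """Parse the call the LLM described and run it; the LLM executes nothing."""
    call = json.loads(llm_output)
    spec = FUNCTIONS.get(call["name"])
    if spec is None:
        raise ValueError(f"unknown function {call['name']!r}")
    missing = [p for p in spec["parameters"]["required"] if p not in call["arguments"]]
    if missing:
        raise ValueError(f"missing arguments: {missing}")
    return spec["impl"](**call["arguments"])


# Suppose the model responded with this data structure instead of prose:
llm_output = '{"name": "get_weather", "arguments": {"city": "Bengaluru"}}'
print(execute_call(llm_output))  # Sunny in Bengaluru
```

Validating the name and arguments before dispatch matters because the model's output is untrusted input to your program.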

Social Media Engagement in Early 2025

Comparing engagement on two dozen recent social media posts

I've been kidnapped by Robert Caro

His books are huge, but I can't put them down.

Emerging Patterns in Building GenAI Products

As we move software products using generative AI technology from proofs of concept into production systems, we are uncovering a range of common patterns. Evals play a central role in ensuring that these non-deterministic systems are operating within sensible boundaries. Large Language Models need enhancement to provide information beyond a generic and static training set. Most of the time we can do this with Retrieval Augmented Generation (RAG), although the basic RAG approach requires several patterns to overcome its limitations. When RAG isn't enough, Fine Tuning becomes worthwhile.
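The basic RAG idea is to retrieve the documents most relevant to a query and place them in the prompt. Production systems normally use embedding models and a vector store; this sketch swaps in word-count cosine similarity so it runs with only the standard library. The documents and query are hypothetical.

```python
import math
import re
from collections import Counter


def tokens(text: str) -> Counter:
    """Lowercased word counts; a crude stand-in for an embedding."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    dot = sum(a[t] * b[t] for t in a)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def retrieve(query: str, docs: list, k: int = 2) -> list:
    """Return the k documents most similar to the query."""
    q = tokens(query)
    return sorted(docs, key=lambda d: cosine(q, tokens(d)), reverse=True)[:k]


def build_prompt(query: str, docs: list) -> str:
    """Augment the prompt with retrieved context before sending it to an LLM."""
    context = "\n".join(f"- {d}" for d in retrieve(query, docs))
    return f"Answer using only this context:\n{context}\n\nQuestion: {query}"


docs = [
    "Refunds are issued within 14 days of a returned item arriving.",
    "Our office is closed on public holidays.",
    "Returned items must be unused and in original packaging.",
]
query = "How long do refunds take for a returned item?"
print(build_prompt(query, docs))
```

The limitations of this naive retrieval (missed synonyms, no notion of relevance beyond word overlap) are exactly the kind of gaps the further RAG patterns address.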

Forest And Desert

The Forest and the Desert is a metaphor for thinking about software development processes, developed by Beth Andres-Beck and hir father Kent Beck. It posits that two communities of software developers have great difficulty communicating with each other because they live in very different contexts, so advice that applies to one sounds like nonsense to the other.

Where Is SW Development Going?

I was on a panel at goto Copenhagen with Holly Cummings, Trisha Gee, Dave Farley, and Daniel Terhorst-North. We discussed the current state of software development and where it was heading. Given the timing, there was much discussion about the role AI would play in our profession's future.

Growing the Development Forest

Luca Rossi hosts a podcast (and newsletter) called Refactoring, so it's obvious that we have some interests in common. The title comes from me leaning heavily on Beth Andres-Beck and Kent Beck's metaphor of The Forest and The Desert. We talk about the impact of AI on software development, the metaphor of technical debt, and the current state of agile software development.

Refactoring with Codemods to Automate API Changes

Refactoring is something developers do all the time: making code easier to understand, maintain, and extend. While IDEs can handle simple refactorings with just a few keystrokes, things get tricky when you need to apply changes across large or distributed codebases, especially those you don’t fully control. That’s where codemods come in. By using Abstract Syntax Trees (ASTs), codemods allow you to automate large-scale code changes with precision and minimal effort, making them especially useful when dealing with breaking API changes. This article looks at how codemods can help manage these challenges, with practical examples like removing feature toggles or refactoring complex React components. We’ll also discuss potential pitfalls and how to avoid them when using codemods at scale.
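The feature-toggle example can be sketched in Python using the standard library `ast` module (Python 3.9+ for `ast.unparse`). A transformer finds `if is_enabled("<flag>"): ... else: ...` and keeps only the enabled branch. `is_enabled` is a hypothetical toggle function; real codebases check toggles in many different ways, so the matching logic would need adapting.

```python
import ast


class RemoveFeatureToggle(ast.NodeTransformer):
    """Replace `if is_enabled("<flag>"): A else: B` with just A."""

    def __init__(self, flag: str):
        self.flag = flag

    def _is_toggle_check(self, node: ast.expr) -> bool:
        return (
            isinstance(node, ast.Call)
            and isinstance(node.func, ast.Name)
            and node.func.id == "is_enabled"
            and len(node.args) == 1
            and isinstance(node.args[0], ast.Constant)
            and node.args[0].value == self.flag
        )

    def visit_If(self, node: ast.If):
        self.generic_visit(node)  # transform nested toggles first
        if self._is_toggle_check(node.test):
            # Returning a list splices these statements in place of the `if`.
            return node.body
        return node


def remove_toggle(source: str, flag: str) -> str:
    tree = RemoveFeatureToggle(flag).visit(ast.parse(source))
    return ast.unparse(ast.fix_missing_locations(tree))


before = '''
if is_enabled("new-checkout"):
    render_new_checkout()
else:
    render_old_checkout()
'''
print(remove_toggle(before, "new-checkout"))  # render_new_checkout()
```

One pitfall shows up immediately: `ast.unparse` discards comments and original formatting. Tools built on concrete syntax trees, such as LibCST for Python, exist largely to preserve that detail when codemods run across a large codebase.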