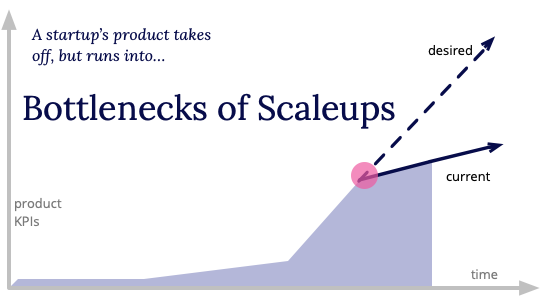

How did you get into the bottleneck?

The most common scaling bottleneck we encounter is technical debt: startups regularly state that tech debt is their main impediment to growth. “Tech debt” tends to be used as a catch-all term, generally indicating that the technical platform and stack need improvement. These startups have seen feature development slow down, quality issues emerge, or engineering frustration grow. The startup team attributes this to technical debt incurred through a lack of technical investment during their growth phase. An analysis is required to determine the type and scale of the tech debt. It could be that the code quality is poor, that an older language or framework is used, or that the deployment and operation of the product isn’t fully automated. The solution might be slight changes to the teams’ processes or an initiative to rebuild parts of the application.

It’s important to say that prudent technical debt is healthy and desirable, especially in the initial phases of a startup’s journey. Startups should trade technical aspects such as quality or robustness for product delivery speed. This will get the startup to its first goal: a viable business model, a proven product, and customers that love the product. But as the company looks to scale up, we have to address the shortcuts taken, or they will very quickly affect the business.

Let’s examine a couple of examples we’ve encountered.

Company A – A startup has built an MVP that has shown enough evidence (user traffic, user sentiment, revenue) for investors and has secured the next round of funding. Like most MVPs, it was built to generate user feedback rather than to have a high-quality technical architecture. After the funding, instead of rebuilding that pilot, they build upon it, keeping the traction by focusing on features. This may not be an immediate problem, since the startup has a small senior team that knows the sharp edges and can put in band-aid solutions to keep the company afloat.

The issues start to arise when the team continues to focus on feature development and the debt isn’t paid down. Over time, the low-quality MVP code becomes a set of core components with no clear path to improve or replace them. There is friction in learning, working with, and supporting the code. It becomes increasingly difficult to expand the team or the feature set effectively. The engineering leaders are also very nervous about losing the original engineers and the knowledge they hold.

Eventually, the lack of technical investment comes to a head. The team becomes paralyzed, which shows up as lower velocity and growing frustration. The startup has to rebuild significantly, meaning feature development has to slow down, allowing competitors to catch up.

Company B – The company was founded by ex-engineers and they wanted to do everything “right.” It was built to scale out of the box. They used the latest libraries and programming languages. It has a finely grained architecture, allowing each part of the application to be implemented with different technologies, each optimized to scale perfectly. As a result, it will easily be able to handle hyper growth when the company gets there.

The issue with this example is that the product took a long time to create, feature development was slow, and many engineers spent time working on the platform rather than the product. It was also hard to experiment: the finely grained architecture meant that ideas that didn’t fit into the existing service architecture were challenging to implement. The company never realized the value of the highly scalable architecture because it was not able to find the product-market fit needed to reach that scale of customer base.

These are two extreme examples, based on an amalgamation of various clients with whom the startup teams at Thoughtworks have worked. Company A got itself into a technical debt bottleneck that paralyzed the company. Company B over-engineered a solution that slowed down development and crippled its ability to pivot quickly as it learnt more.

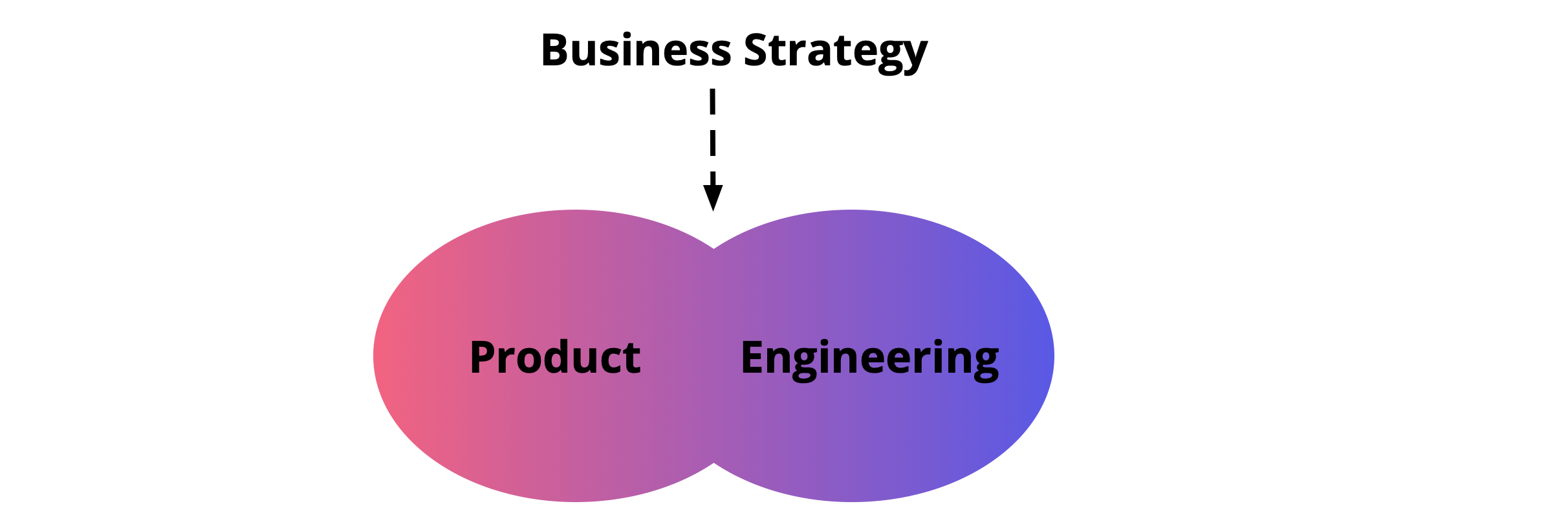

The theme with both is an inability to find the right balance of technical investment vs. product delivery. Ideally, we want to use prudent technical debt to power rapid feature development and experimentation. When the ideas are found to be valuable, we should pay down that technical debt. While this is very easily stated, it can be a challenge to put into practice.

To explore how to create the right balance, we are going to examine the different types of technical debt:

Typical types of debt:

Technical debt is an ambiguous term, often regarded as purely code-related. For this discussion, we’re going to use technical debt to mean any technical shortcut, where we’re trading long-term investment into a technical platform for short-term feature development.

- Code quality

  - Code that is brittle, hard to test, hard to understand, or poorly documented makes all development and maintenance tasks slower, takes the enjoyment out of writing code, and demotivates engineers. Another example is a domain model and associated data model that no longer fit the current business model, resulting in workarounds.

- Testing

  - A lack of unit, integration, or end-to-end (E2E) tests, or the wrong distribution of them (see the test pyramid). Developers can’t quickly gain confidence that their code won’t break existing functionality and dependencies. This leads to developers batching changes and deploying less frequently. Larger increments are harder to test and often result in more bugs.

- Coupling

  - When modules are tightly coupled (this often happens in a monolith), teams can block each other, reducing deployment frequency and increasing lead time for changes. One solution is to pull modules out into microservices, which comes with its own complexity; there can be more straightforward ways of setting clear boundaries within the monolith.

- Unused or low-value features

  - Not typically thought of as technical debt, but one of the symptoms of tech debt is code that is hard to work with. More features create more conditions and more edge cases that developers have to design around, which erodes delivery speed. A startup is experimenting, so we should always go back and re-evaluate whether the experiment (the feature) is working and, if not, delete it. Emotionally, it can be very difficult for teams to make that judgment call, but it becomes much easier with objective data quantifying the feature’s value.

- Out-of-date libraries or frameworks

  - The team will be unable to take advantage of new improvements and will remain vulnerable to security problems. This also creates a skills problem, slowing down the onboarding of new hires and frustrating current developers who are forced to work with older versions. Additionally, those legacy frameworks tend to limit further upgrades and innovation.

- Tooling

  - Suboptimal third-party products or tools that require a lot of maintenance. The landscape is ever-changing, and more efficient tooling may have entered the market. Developers also naturally want to work with the most efficient tools. The balance between buying and building is complex and needs reassessing with the remaining debt in mind.

- Reliability and performance engineering problems

  - These can affect the customer experience and the ability to scale. We have to be careful, as we have seen effort wasted on premature optimization when scaling for a hypothetical future situation. It’s better to have a product proven to be valuable to users than an unproven product that can scale. We’ll describe this in more detail in the piece on “Scaling Bottleneck: Built without reliability and observability in mind”.

- Manual processes

  - Part of the product delivery workflow isn’t automated. This could be steps in the developer workflow or tasks related to managing the production system. A warning: this can also go the other way, when you spend a lot of time automating something that isn’t used enough to be worth the investment.

- Automated deployments

  - Early-stage startups can get away with a simple setup, but this should be addressed very soon, because small incremental deployments power experimental software delivery. Use the four key metrics as your guidepost. You should have the ability to deploy at will, usually at least once a day.

- Knowledge sharing

  - Lack of useful information is a form of technical debt. It makes it difficult for new employees and dependent teams to get up to speed. As standard practice, development teams should produce concisely written technical documentation, API specifications, and architectural decision records. These should also be discoverable via a developer portal or search engine. An anti-pattern is having no moderation or deprecation process to keep that documentation accurate.
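Several of the items above are judged by deployment frequency and lead time for changes, two of the four key metrics. As a rough illustration, here is a minimal Python sketch of how a team might compute them, plus change failure rate, from its own deployment records. The `Deployment` record, its field names, and the three helper functions are hypothetical, chosen for this example rather than taken from any particular tool, and the sketch assumes the team already logs commit and deploy timestamps.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta
from statistics import median


@dataclass(frozen=True)
class Deployment:
    """One production deployment (hypothetical record shape)."""
    committed_at: datetime        # when the change was committed
    deployed_at: datetime         # when it reached production
    caused_failure: bool = False  # whether it led to an incident or rollback


def deployments_per_day(deployments: list[Deployment], window_days: int) -> float:
    """Deployment frequency: average production deployments per day in the window."""
    return len(deployments) / window_days


def median_lead_time(deployments: list[Deployment]) -> timedelta:
    """Lead time for changes: median time from commit to production."""
    return median([d.deployed_at - d.committed_at for d in deployments])


def change_failure_rate(deployments: list[Deployment]) -> float:
    """Share of deployments that caused a failure in production."""
    return sum(d.caused_failure for d in deployments) / len(deployments)
```

Tracking these numbers over time, rather than as a one-off, is what makes them useful: a falling deployment frequency or a rising lead time is an early sign that one of the debts listed above is starting to bite.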

Is that really technical debt or functionality?

Startups often tell us about being swamped with technical debt, but under examination they’re really referring to the limited functionality of the technical platform, which needs its own proper treatment with planning, requirement gathering, and dedicated resources.

For example, Thoughtworks' startup teams often work with clients on automating customer onboarding. They might have a single-tenant solution with little automation. This starts off well enough: the developers can manually set up the accounts and track the differences between installs. But as more clients are added, this becomes too time-consuming for the developers, so the startup might hire dedicated operations staff to set up the customer accounts. As the user base and functionality grow, it becomes increasingly difficult to manage the different installs: customer onboarding time increases, and so do quality problems. At this point, automating the deployment and configuration or moving to a multi-tenant setup will directly impact KPIs. This is functionality.
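One small step toward that automation is to record each customer install's configuration as data and compare it against a shared baseline, so the differences between installs are visible rather than held in someone's head. The following is a minimal Python sketch under that assumption; the setting names, the `BASELINE` dictionary, and the `config_drift` function are hypothetical and only illustrate the idea.

```python
# Hypothetical baseline configuration shared by all customer installs.
BASELINE = {"plan": "standard", "sso_enabled": False, "region": "eu-west"}


def config_drift(baseline: dict, install: dict) -> dict:
    """Return the settings where an install differs from the baseline.

    The result maps each differing key to (baseline_value, install_value);
    a key missing on either side is reported with None on that side.
    """
    keys = baseline.keys() | install.keys()
    return {
        key: (baseline.get(key), install.get(key))
        for key in sorted(keys)
        if baseline.get(key) != install.get(key)
    }


# Example: an install that enabled SSO and added a custom domain by hand.
acme_install = {
    "plan": "standard",
    "sso_enabled": True,
    "region": "eu-west",
    "custom_domain": "acme.example",
}
drift = config_drift(BASELINE, acme_install)
```

A report like `drift` gives the team a concrete list of per-customer exceptions to either fold into the product as supported options or remove before moving to automated or multi-tenant deployment.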

Other forms of technical debt are harder to spot and harder to tie to a direct impact, such as code that is difficult to work with or short, repeated manual processes. The best way to identify them is with feedback from the teams that experience them day to day. A team’s continuous improvement process can handle them; they shouldn’t require a dedicated initiative to fix.